The hard part is defining your estimand

Thinking through what it means for a default study to 'work' or not.

An estimand is the thing a study tries to estimate. It’s the quantity (or quality!) of substantive/empirical/theoretical interest. An outcome is how you try to operationalize your estimand; a measurement strategy is how you try to collect data on it. If you’re lucky, your outcome is your estimand, full stop (perhaps you study the effects of Medicaid enrollment on mortality, and you actually have mortality data). If you study how menu design affects sustainable food choices, however, and you record hypothetical selections, they’re probably a little further apart.

Defining your estimand is hard. It’s not a statistical question.[1] It’s something you need to think about.

In whatever-you’d-call-the-field-I’m-in,[2] we don’t always agree about the “key” estimand. I focus on net reductions in meat consumption. Your focus might be different. If you care most about global warming, getting people to switch from beef to just about anything else is a win. Alternatively, if you are concerned with the salt content of fish sauce, you might try to get people to take smaller servings of it. I, by contrast, care about how many fish suffered, so for a fish sauce study I’d want to see net fish sauce consumption rather than consumption per person. So long as you report the number of fish-sauce-eaters and how much fish sauce they consumed on average, we can both get what we want out of the results.
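To make the fish sauce point concrete: net consumption is just the number of eaters times the average amount each ate, so a study that reports both lets each reader recover the estimand they care about. The sketch below uses made-up numbers (hypothetical, not from any study), and the names `Arm`, `n_eaters`, and `mean_serving_ml` are illustrative only. It shows how an intervention can shrink the average serving while raising net consumption.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    """Summary statistics for one study arm (hypothetical example)."""
    n_eaters: int            # how many people took any fish sauce
    mean_serving_ml: float   # average amount taken per eater, in ml

    @property
    def net_ml(self) -> float:
        # Net consumption = number of eaters x average serving per eater
        return self.n_eaters * self.mean_serving_ml


# Hypothetical control and treatment arms, invented for illustration only
control = Arm(n_eaters=100, mean_serving_ml=15.0)
treatment = Arm(n_eaters=140, mean_serving_ml=12.0)

per_person_change = treatment.mean_serving_ml - control.mean_serving_ml
net_change = treatment.net_ml - control.net_ml

print(f"Per-person change: {per_person_change:+.1f} ml")
print(f"Net change: {net_change:+.1f} ml")
```

With these invented numbers, the salt-minded researcher sees servings shrink by 3 ml per person, while the fish-counting researcher sees net consumption rise by 180 ml. Same data, opposite verdicts, and both are recoverable only because the count and the mean are reported separately.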

Sometimes it ain’t so easy. Consider two examples from the defaults literature.

Plant-based defaults at catered events

Boronowsky et al. (2022) study the effects of plant-based defaults at catered events on two college campuses and find that “participants assigned to the plant-based default were 3.52 (95% CI: [2.44, 5.09]) times more likely to select plant-based meals.” Good stuff! However, readers might wonder whether participants then ate more meat at a later meal. The authors respond that this is a subject for future work, noting that “[p]revious studies investigating the default nudge have explored and shown positive effects of the intervention over time and partial persistence of behavior change after the intervention ended (Kurz, 2018).”

Here’s a different way to approach this: define your estimand as the amount of meat eaten at the specific catered events where you change the default. You are not responsible for everything people do all the time. If you’re a conference organizer, the core thing you care about and control is your conference’s carbon footprint.

True, if I write a meta-analysis about net reductions in meat consumption, I might not include your study in the database if I think that the estimands are too different. But that’s not a knock against the original study.[3]

Plant-based defaults in cafeterias: a harder case

Ginn & Sparkman (2024) also study plant-based defaults in a college setting, but their intervention takes place on randomly assigned days at three universities’ cafeterias. (Some dining stations effectively hid the meat on treatment days, with signs letting people know that meat was available upon request.) The study found large effects, but the case is complicated for two reasons. First, not all the treatment units actually received treatment, in part because at one site, “dining staff may have inadvertently promoted the meat dish on plant-based default days.” Second, students could walk away.

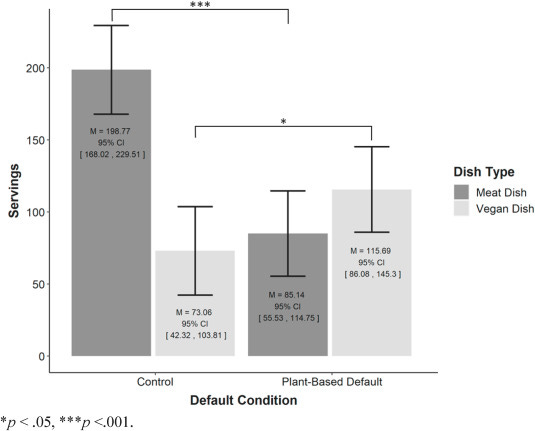

Figure 2 tells the story:

On intervention days, meat sales went down and plant-based sales went up, but the drop in meat sales was larger than the rise in plant-based sales. What seems to have happened is that some people approached the station, saw there wasn’t any meat, and bailed. Here’s how the authors address this:

There was a 26.1% reduction in total servings on plant-based default days. This may partially contribute to the decrease in meat dishes sold, with some likely opting for meat dishes at other stations in the cafeteria. Notably, even if we assume 100% of these patrons selected a meat dish elsewhere—a worst-case scenario for the intervention—we still would have an estimated 21.4% reduction in meat dishes sold as a result of the intervention (i.e., adding the estimated attrition of default days compared to control days entirely to meat dishes sold on plant-based default days still would result in a reduction in meat dishes served).
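The worst-case bound in that quote is simple bookkeeping: take the servings lost on default days, add all of them back to the meat column, and check whether meat is still down. Below is a minimal sketch with hypothetical daily counts (not the study’s data). The function names and the `meat_per_opt_out` parameter are illustrative assumptions: a value of 1.0 reproduces the authors’ worst case, and larger values stand in for opt-outs who eat more meat elsewhere than one dish each.

```python
def meat_change_with_opt_outs(control_meat: float, control_total: float,
                              default_meat: float, default_total: float,
                              meat_per_opt_out: float = 1.0) -> float:
    """Proportional change in meat dishes once opt-outs are added back.

    meat_per_opt_out is an assumption the sales data cannot reveal:
    1.0 means every serving lost on default days became one meat dish
    elsewhere; values above 1.0 model opt-outs who eat more meat than that.
    """
    # Servings that "walked away" on default days relative to control days
    attrition = max(control_total - default_total, 0.0)
    # Credit the default days with the meat the opt-outs are assumed to eat
    implied_meat = default_meat + meat_per_opt_out * attrition
    return implied_meat / control_meat - 1.0


def break_even_meat_per_opt_out(control_meat: float, control_total: float,
                                default_meat: float, default_total: float) -> float:
    """Meat dishes per opt-out at which the estimated reduction vanishes."""
    attrition = control_total - default_total
    if attrition <= 0:
        # No one walked away, so no off-site eating can offset the reduction
        return float("inf")
    return (control_meat - default_meat) / attrition


# Hypothetical average servings per day (made up, not the study's data)
control_meat, control_plant = 300.0, 100.0
default_meat, default_plant = 120.0, 180.0
args = (control_meat, control_meat + control_plant,
        default_meat, default_meat + default_plant)

for r in (0.0, 1.0):
    print(f"meat per opt-out = {r}: {meat_change_with_opt_outs(*args, r):+.1%}")
print(f"break-even meat per opt-out: {break_even_meat_per_opt_out(*args):.2f}")
```

With these made-up numbers, the naive 60% drop in meat shrinks to about 27% under the worst-case assumption and disappears entirely if each opt-out eats 1.8 meat dishes elsewhere. That is the worry about hardcore carnivores expressed as a single number you can argue about.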

If your estimand here is “number of people who chose meat,” great! But if you care about the total amount of meat served, you need an additional assumption about the people who opted out: that they ate roughly the same amount of meat wherever they went as they would have eaten at the intervention station. If the people who opted out are the most hardcore carnivores, that assumption might not hold. Facing a similar measurement challenge, Russo et al. (2025) implement a meat-free day and likewise find that it reduces both meat consumption and dining hall attendance. They also calculate that if about 9% of the students who opted out got a burger off campus instead, the intervention’s environmental benefits would be fully negated.
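A break-even calculation of this kind can be sketched as follows. This is a stylized version, not necessarily how Russo et al. (2025) did it: compare the emissions avoided on campus with the emissions added if every opt-out bought one burger off campus, and the ratio is the share of opt-outs who would have to substitute to cancel the savings. The function name, its parameters, and every number below (meal counts and kg CO2e per meal) are hypothetical placeholders; the point is the structure of the calculation, not the 9% figure.

```python
def break_even_fraction(meat_meals_avoided: float, kg_per_meat_meal: float,
                        plant_meals_added: float, kg_per_plant_meal: float,
                        n_opt_out: int, kg_per_burger: float) -> float:
    """Share of opt-outs whose off-campus burgers would cancel the savings.

    Assumes (hypothetically) that each substituting student eats exactly
    one burger and that emissions per meal are fixed averages. A result
    above 1.0 means that even if every opt-out bought a burger, the
    on-campus savings would not be fully erased.
    """
    # Emissions avoided on campus: meat meals not served, minus the
    # plant-based meals that replaced some of them
    net_avoided = (meat_meals_avoided * kg_per_meat_meal
                   - plant_meals_added * kg_per_plant_meal)
    # Emissions added if *every* opt-out bought one burger off campus
    worst_case_added = n_opt_out * kg_per_burger
    return net_avoided / worst_case_added


# Hypothetical inputs, invented for illustration only
fraction = break_even_fraction(
    meat_meals_avoided=1000, kg_per_meat_meal=2.0,
    plant_meals_added=700, kg_per_plant_meal=0.5,
    n_opt_out=3000, kg_per_burger=5.0,
)
print(f"Break-even share of opt-outs buying a burger: {fraction:.1%}")
```

The result is only as good as the assumed per-meal emissions and the one-burger-per-opt-out rule, which is exactly the extra structure a “total meat” or “total emissions” estimand forces you to impose.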

So if your estimand is “total amount of meat served” and you can’t measure that directly, either you or your readers will have to impose more structure to get there. On the other hand, if the main thing you care about is making plant-based options more normal (more people eating them, being seen eating them, telling their friends about them, etc.), then measuring the number of people who eat plant-based meals is perfect.

Either way, it comes down to defining your estimand.

[1] In a related vein, Andrew Gelman says the most neglected topic in statistics is measurement: “the connection between the data you gather and the underlying object of your study.”

[2] Animal welfare studies? Behavioral science applied to ending factory farming?

[3] I am a strong believer that meta-analyses should be “selective, not comprehensive.”
